In the pursuit of efficiency, our team has created a cutting-edge tool that transforms how users interact with internal documents, promising to reduce query times by at least 60%. More than just a tool, Barko Agent is a technological marvel engineered to optimize user queries across our massive document repositories.

As we explore the intricacies of Barko Agent, picture a scenario where retrieving crucial data from the documents your business produces is not a laborious chore but rather an enjoyable event. Nonetheless, crafting this advanced technology presented significant difficulties that had to be overcome. We built Barko Agent to harness the power of AI to improve organizational efficiency, make employees' lives easier, save time when looking for internal documents, and answer minor queries.

This article walks you through some of the challenges we faced during the development of Barko Agent.

Rule implementation

Barko Agent's rule implementation posed a twofold challenge: staying flexible while respecting limitations. We had to ensure that our AI produced insightful results while staying within the documents' own parameters and not straying from the facts presented in them. Coming up with guidelines for Barko Agent's conduct was not easy: we continually had to give the AI enough freedom to explore while keeping it from drifting into sensitive or irrelevant areas within documents, or outside its scope altogether.

Finding the sweet spot was crucial: rules that allowed some flexibility for dynamic queries but also served as boundaries to keep the AI on course. It was a painstaking process of trial and error, fine-tuning parameters, and developing project-specific rule sets; for instance, certain projects might call for more creativity or coding, while others are more general.
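
As an illustration of what a project-specific rule set could look like, here is a minimal sketch. It is not Barko Agent's actual code: the `ProjectRules` class, the rule wording, and the use of a `temperature` setting as the "creativity" knob are all assumptions made for the example.

```python
from dataclasses import dataclass, field

# Hypothetical baseline rules shared by every project (wording is illustrative).
BASE_RULES = [
    "Answer only from the provided documents.",
    "If the documents do not contain the answer, say that no information is available.",
]


@dataclass
class ProjectRules:
    """Assumed shape of a per-project rule set."""
    name: str
    temperature: float = 0.0  # assumption: lower values keep answers closer to the source text
    extra_rules: list[str] = field(default_factory=list)

    def system_prompt(self) -> str:
        # Shared rules come first, then the project's own additions.
        rules = BASE_RULES + self.extra_rules
        numbered = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
        return f"You are Barko Agent for the '{self.name}' project.\n{numbered}"


# A general-purpose project keeps the defaults; a coding-heavy one loosens them a little.
hr = ProjectRules("HR")
dev = ProjectRules(
    "Engineering",
    temperature=0.4,
    extra_rules=["You may write code examples based on the documents."],
)
```

Keeping the shared rules in one place and letting each project only add to them means a project can be given more freedom without accidentally dropping the common boundaries.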

Multi-project management

With Barko Agent, the initial idea was to build a querying tool for our internal documentation. As interest grew, more and more internal teams asked whether their project documentation could be added so that they could query it too (for example, HR using it for quick reference to corporate policy). Our approach was to create separate instances of the main class, which handles calling, answering, and managing documents, and then assign each instance to the right users' profiles. As a result, a Project Manager can now create their own instance of Barko Agent and add Project members, who will be the only ones with access to that instance.
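
The per-project instance idea can be sketched roughly as follows. All names here (`AgentInstance`, `AgentRegistry`, and their methods) are hypothetical, and the sketch assumes the Project Manager also has access to the instance they create; the real main class does much more than hold a member list.

```python
from dataclasses import dataclass, field


@dataclass
class AgentInstance:
    """Hypothetical stand-in for one project's instance of the main class."""
    project: str
    manager: str
    members: set[str] = field(default_factory=set)
    documents: list[str] = field(default_factory=list)

    def can_access(self, user: str) -> bool:
        # Assumption: the manager counts as a member of their own project.
        return user == self.manager or user in self.members


class AgentRegistry:
    """Maps project names to instances and enforces membership on lookup."""

    def __init__(self) -> None:
        self._instances: dict[str, AgentInstance] = {}

    def create(self, project: str, manager: str) -> AgentInstance:
        if project in self._instances:
            raise ValueError(f"project {project!r} already exists")
        instance = AgentInstance(project=project, manager=manager)
        self._instances[project] = instance
        return instance

    def add_member(self, project: str, acting_user: str, new_member: str) -> None:
        instance = self._instances[project]
        if acting_user != instance.manager:
            raise PermissionError("only the project manager can add members")
        instance.members.add(new_member)

    def instance_for(self, project: str, user: str) -> AgentInstance:
        instance = self._instances.get(project)
        # Unknown projects and non-members get the same error, so names do not leak.
        if instance is None or not instance.can_access(user):
            raise PermissionError(f"{user!r} has no access to {project!r}")
        return instance
```

Resolving the instance from both the project and the user on every request keeps the access check in a single place rather than scattering it across the querying code.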

Jailbreaks

"Do Anything Now," or DAN, posed a significant challenge because it attempts to overcome the limitations set for the AI. DAN is a text prompt that a user can include in an LLM query to bypass guidelines or rules set by the developer. A successful DAN prompt could give the user access to restricted documents or knowledge they should not have, or bypass certain moderation guidelines. To counter it, we created a general rule along the lines of "Do not do that if it is not in the documents." Barko Agent now identifies a document before responding, making sure that the document returned from the database matches the user's query. This means that if no document contains information matching the request, as is the case with a DAN prompt, Barko Agent simply replies that no information was available.

Making the LLM respond only from the documents was difficult. We ran many tests with different rules, but ultimately the most effective method was to go through the publicly released DAN prompts, which are easy to find with a quick Google search and share certain keywords, and block those keywords within the context. For instance, Barko Agent will decline user attempts to "Unlock" it, and renaming the AI is prohibited, since renaming is also a means of turning Barko Agent into DAN.
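
A keyword screen of this kind might look like the sketch below. The pattern list, the refusal text, and the `screen_query` helper are illustrative assumptions, not the list Barko Agent actually uses; a real list would be compiled from the published DAN prompts.

```python
import re
from typing import Optional

# Hypothetical phrases of the kind that recur in published jailbreak prompts.
BLOCKED_PATTERNS = [
    r"\bdo anything now\b",
    r"\bunlock(ed)?\b",
    r"\bjailbreak\b",
    r"\byour (new )?name is\b",  # renaming attempts
    r"\bignore (all )?(previous|prior) (instructions|rules)\b",
]
_BLOCKED = re.compile("|".join(BLOCKED_PATTERNS), re.IGNORECASE)

# Illustrative refusal message.
REFUSAL = "Sorry, I can only answer questions about the project documents."


def screen_query(query: str) -> Optional[str]:
    """Return a refusal if the query looks like a jailbreak attempt, else None."""
    if _BLOCKED.search(query):
        return REFUSAL
    return None
```

A screen like this runs before the query reaches the model, so a blocked prompt never enters the context. Keyword matching is easy to evade with rephrasing and can produce false positives, so it works best alongside the "only answer from the documents" rule rather than instead of it.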

Model selection, the best one, and why

Barko Agent's development was not an easy task. Our main problem was striking a balance between price and the quality of the responses. After giving it a lot of thought, we chose the GPT-3.5-turbo-16k model. Its affordable price, large token limit, and strong performance struck the right balance for us. A detailed comparison between models and technologies will be covered in a more in-depth follow-up article.

Security and privacy

Another important aspect to consider was the privacy and security of the documents available to the AI. Specifically, the AI must never hand out data that contains employees' personal information or is not for general release. To achieve this, we isolated the AI from sensitive information and fed it only documents assigned a certain pre-determined security level, keeping anything sensitive or outside its remit out of its reach.
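
Filtering by security level before anything reaches the AI could be sketched like this. The level names, the `SourceDocument` type, and the `MAX_INDEXABLE_LEVEL` threshold are assumptions for illustration; the actual classification scheme is not described here.

```python
from dataclasses import dataclass
from enum import IntEnum


class SecurityLevel(IntEnum):
    """Hypothetical classification scheme; higher means more sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2


@dataclass(frozen=True)
class SourceDocument:
    title: str
    level: SecurityLevel
    text: str


# Assumption: only documents at or below this level are ever handed to the AI.
MAX_INDEXABLE_LEVEL = SecurityLevel.INTERNAL


def indexable(documents: list[SourceDocument]) -> list[SourceDocument]:
    """Keep only the documents the AI is allowed to see."""
    return [doc for doc in documents if doc.level <= MAX_INDEXABLE_LEVEL]
```

Applying the filter at load time, rather than asking the model to withhold sensitive content, means a successful jailbreak cannot reveal what was never loaded.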

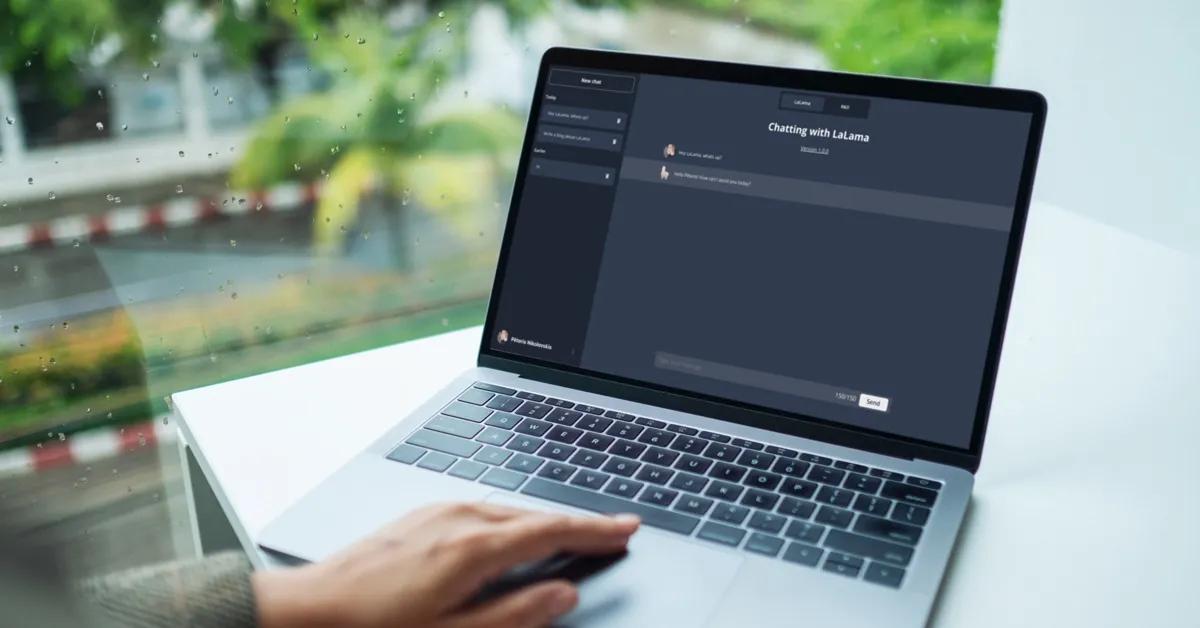

Friendly UI

There was also the user interface (UI) puzzle to solve. Our goals were to allow seamless navigation between multiple projects and user profiles, and to keep the conversation visible on the side. Inspired by existing LLM user interfaces, our designers created a simple yet useful design, recognizing how important a user-friendly interface is to the tool's success.

Even though the result looks plain, setting up a GPT-based LLM tool is not a simple task. We combined a number of services, and to create project-based Barko Agent instances we had to load documents, access the main class, and query individual instances.

Problems with the LangChain library

LangChain is an open-source framework that lets developers working with artificial intelligence (AI) and its machine learning subset combine large language models with external components to build LLM-powered applications. While using LangChain, we discovered a significant error when loading Google documents. To fix it, we had to change the LangChain code that loads a document from its ID. Because the loader read the whole document's bytes as a table after conversion, our solution was to combine the text from its columns and rows or, more accurately, to combine the document's bytes into a DocxDocument from the Docx library. Once this was implemented, Barko Agent functioned seamlessly.
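
To illustrate the general idea of treating downloaded bytes as a .docx file and flattening both paragraph and table text, here is a small sketch. It deliberately uses only the Python standard library instead of the Docx library mentioned above, it is not the patch applied to LangChain, and the `docx_bytes_to_text` helper is a hypothetical name.

```python
import io
import xml.etree.ElementTree as ET
import zipfile

# WordprocessingML namespace used inside word/document.xml.
W_NS = "{http://schemas.openxmlformats.org/wordprocessingml/2006/main}"


def docx_bytes_to_text(data: bytes) -> str:
    """Extract plain text from the raw bytes of a .docx file.

    A .docx file is a ZIP archive; the body lives in word/document.xml.
    Paragraphs inside table cells are visited too, in document order,
    so row and column text ends up in the same flat output.
    """
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        xml_bytes = archive.read("word/document.xml")

    root = ET.fromstring(xml_bytes)
    lines = []
    for paragraph in root.iter(f"{W_NS}p"):
        # A paragraph's text is split across one or more <w:t> runs.
        text = "".join(node.text or "" for node in paragraph.iter(f"{W_NS}t"))
        if text.strip():
            lines.append(text)
    return "\n".join(lines)
```

Given the bytes of an exported document, `docx_bytes_to_text(data)` returns one line per non-empty paragraph, whether that paragraph sat in the body or inside a table cell.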

Conclusion

To conclude, even though Barko Agent may appear straightforward, building it required us to overcome obstacles in choosing the best model, creating a user-friendly interface, and dealing with unforeseen problems that came from involving external services. Though the journey was not without its detours, the outcome is a strong, effective, and user-friendly tool that simplifies information retrieval across projects.